Linked Research: An Approach for Scholarly Communication

This article is superseded by Linked Research on the Decentralised Web.

- Identifier

- https://csarven.ca/linked-research-scholarly-communication

- Notifications Inbox

- inbox/

- In Reply To

- ACM Hypertext 2016 Call for Contributions

- Published

- Modified

- License

- CC BY 4.0

Abstract

The future of scholarly communication involves research results, analysis and data all being produced, published, verified and reused interactively on the Web, with ‘papers’ linking to and from each other at a granular level. The academic process of peer review is increasingly becoming open, transparent and decentralised. More broadly, the mechanism for global knowledge sharing is becoming an ongoing conversation between experts, policy makers, implementers, and the general public. This vision is radical, and getting there requires understanding of, and change in, a number of interrelated areas. In this article we break down the problem space and define requirements for advancement towards a Web-based ecosystem for scholarly communication: Linked Research. We discuss our strategy for tackling each of these areas. This includes how we can build on and combine existing well-known technologies and practices for digital publishing, social interactions, decentralised data storage, and semantic data enrichment. We provide an initial assessment of our proposed strategy through an example implementation of tooling which sets out to meet the requirements.

Keywords

Introduction

You never change things by fighting the existing reality. To change something, build a new model that makes the existing model obsolete

– attributed to Buckminster Fuller.

One of the most widely debated questions in the scientific community is the impact of digitisation on scholarly communication and knowledge exchange; the Internet and the Web have radically changed the processes of scholarship [1]. In this article, we propose that digitising, and indeed Webizing [2], scholarly communication can provide greater coherency between and within academic fields, as well as make knowledge more accessible to researchers and citizens alike. Many forms of scholarship are enabled by these technology changes, be it the ability to perform social science using social media data, or large scale data processing for physics. Despite efforts through Open Access (OA) movements and the increasing availability of online publications, the formal communications mechanisms used by scholars do little to take full advantage of either the capabilities of online media, or the cultural shift towards sharing and public commentary on social networks [3, 4].

On examination, it becomes clear that there are many interrelated problems to be tackled before it will be possible to fully realise the possibilities offered by modern Web technologies in the academic space. Many of these problems are relevant in a number of domains outside of scholarly publishing, and they are each to different degrees being considered in existing or ongoing work, in both academia and industry. Our first contribution is an overview of different issues, briefly summarising the extent to which they are addressed in current work, and how they relate to and depend upon one another in the context of scholarly communication. We attempt to articulate a complete picture of the problem space of scholarly communication by defining requirements in response to the problems described in existing literature, or observed ‘in the field’. These requirements fall into three broad categories:

- Publishing and ownership of documents and data.

- Discovery and reuse of knowledge and data.

- User experience and tooling.

From these requirements, we derive user stories, and explain how these may be repurposed as an acid test or criteria against which to evaluate proposed solutions. Our strategy for addressing the problems identified involves building upon existing technologies, standards and best practices, each of which we discuss in detail in section 4.

Finally, we describe an implementation of the Linked Research concept for authoring, publishing and annotating research articles in a decentralised manner, based on native Web technologies. We demonstrate how multi-modal content such as video, audio, code examples and runnable experiments can be embedded into research publications, and showcase how different views of the content can be rendered for different devices (e.g. screen, print, mobile) or audiences (e.g. slide shows). A particular strength of our approach is the integration and linking of data, which can automatically update tables and diagrams when the underlying data changes. All content can be annotated and represented using semantic knowledge representation formalisms to facilitate better search, exploration, retrieval and linking of concepts and ideas. Further, our implementation takes advantage of common social media practices to enable sharing and commentary around scholarly work in both ongoing dialogue between interested parties, and more formal conference and workshop settings. We build upon emerging standards in personal data stores, annotations and social notifications to allow our implementation to be completely decentralised, reliant on no central system or hub.

Requirements

The Linked Research requirements provide a framework for thinking about how the various parts of the problem space of scholarly communication are connected to each other. By establishing a framework, Linked Research can be seen as both a benchmarking exercise and a technique to connect various technologies and approaches. The topic areas are described below, and we have identified several interdependencies between them, whereby aspects of one problem are much more easily solved alongside, or on the back of, another.

Socially, Linked Research is embedded within the Open Science movement [5]. However, it explicitly calls out the role of technical choices in meeting open science goals.

Publishing and ownership

Open Access: To better enable scientific progress, research work - from raw results to completed analysis - should be widely available and accessible. Maximising reach in this way increases the likelihood of further scientific progress and new discoveries [6]. Ideally this is achieved by way of free-of-charge access and reuse of all material, including code, media, source text, data and metadata, so knowledge may be distributed regardless of wealth, social status or geographical location of either the researcher or the consumer. This requirement is to some extent borrowed from the well known Open Access [7] movement (though aiming to avoid the additional restrictions often imposed by publishers such as Article Processing Charges or Gold Access fees).

Access control and attribution: In many fields, researchers are discouraged from publishing unfinished, inconclusive or ongoing work, or publishing outside of ‘official’ venues by the risk that their work will be plagiarised or reused by another without permission or due credit. To facilitate open scientific discourse, technologies for publishing must afford trust that sharing work will not disadvantage the author. Thus the author must have means to unambiguously attach their identifying information to their own work, and selectively grant and revoke access to others during the research process. Presently there are many services offering identifiers to creators of scholarly work: the Library of Congress Linked Data Service for personal and family names and corporate bodies, Virtual International Authority File (VIAF), International Standard Name Identifier (ISNI) and the Getty’s Union List of Artist Names (ULAN), ResearchGate, Google Scholar, Scopus Author Identifier, ResearcherID, and Open Researcher and Contributor ID (ORCID). These systems are minimally, if at all, interoperable without human intervention, so creating multiple profiles is common practice. Some of these systems do interoperate with certain others, though through proprietary solutions rather than open standards; e.g., ORCID can import data from ResearcherID. It is part of our vision to empower researchers to choose an identity provider they trust or participate with their own Web identifier, which is globally recognisable and unique.

Provenance and accountability of information: Trust and confidence in data and results can be fostered by making the experiments required for validation reproducible, and by providing a coherent explanation of how conclusions were derived and which agents were responsible; however, this tends to be difficult under current practices. Capturing scientific processes, workflows and data origins in a machine-readable way, and exposing Research Objects [10], will further improve the utility of scholarly contributions.

Persistence and long term preservation: Research results must be made available in such a way that access to them is reliable and consistent over time, and irrespective of place. Studies show that a significant number of referenced Web resources are not preserved or are at risk of disappearing [11]. Broken links, missing data, authors who move institution, organisations which close or merge or rename, and regimes which censor can all hamper access to and reuse of knowledge. A Linked Research ecosystem strives for persistent, long-term access to information as a technical and social norm by incorporating repositories in the preservation process.

Commentary and feedback: The academic peer-review process has been used to judge whether work makes contributions to the field. Studies are beginning to show the unreliability and elitist nature of this process [13, 14] and thus we call for transparency and broader participation in research discourse. It should be possible and expected for anyone to review academic work, and for this review to be attributed and recognised as a contribution of the reviewer in its own right. Moreover, research has shown that transparency in the peer-review process may be an indicator of the quality of peer-review [15].

Discovery and reuse

Human and machine-readability: Scholarly content, mostly published in binary form (e.g., PDF, Word), is currently not machine-readable to the extent that automatic post-processing is possible. Desirable modes of post-processing include sorting, aggregation and more accessible display of information, to improve the ease of use by humans. Many aggregation and indexing systems require manual input of metadata (or careful scraping by proprietary software), and authors undertake this labour, often repeatedly across different systems, for the pay-off of improving findability of their work. This labour can be avoided by generating metadata from article content and publishing it according to a standard syntax and semantics.
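As a rough illustration of generating metadata from article content, the following Python sketch pulls a title and author names out of an HTML article using only the standard library. The markup conventions it relies on (a `<title>` element and `<meta name="author">` elements), the output keys, and the sample article are assumptions chosen for the example; they do not describe any particular tool or required format.

```python
from html.parser import HTMLParser


class ArticleMetadataParser(HTMLParser):
    """Collects the <title> text and <meta name="author"> values."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.authors = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attributes.get("name") == "author":
            self.authors.append(attributes.get("content", ""))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text that appears inside <title> contributes to the title.
        if self._in_title:
            self.title += data


def extract_metadata(html_text):
    """Return a plain dict of metadata found in the article markup."""
    parser = ArticleMetadataParser()
    parser.feed(html_text)
    parser.close()
    return {"title": parser.title.strip(), "authors": parser.authors}


# Hypothetical article markup, used only for demonstration.
SAMPLE_ARTICLE = """
<html><head>
<title>An Example Article</title>
<meta name="author" content="A. Author">
<meta name="author" content="B. Author">
</head><body><p>Text.</p></body></html>
"""

metadata = extract_metadata(SAMPLE_ARTICLE)
```

Because the metadata is derived from the article itself, an author would only need to maintain it in one place; an indexing service could run a similar extraction instead of asking for manual input.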

Integration of rich semantics: Within and around the prose, scholarly communication comprises rich semantic structures:

- the structure of the content, e.g. in sections, examples, definitions, theorems, notations;

- the argumentation, e.g. premises, assumptions, deductions, conclusions;

- facts and relationships between entities expressed in sentences;

- all kinds of data (examples, experiment results).

The Joint Declaration of Data Citation Principles [16], endorsed by over 100 organisations, explicitly calls for data to be treated the same as papers in the scholarly ecosystem, and for machine readability of the data. Similarly, the FAIR Data principles for findable, accessible, interoperable and reusable data encourage the semantically interoperable reuse of data.

Making this level of semantic structure available to machines for querying (and ultimately automated reasoning) facilitates development of intelligent support services for scholars, such as recommendation, search and comparison for related work.

Globally unique identification of entities: Our premise: “Any resource of significance should be given a URI” [17] and “The global scope of URIs promotes large-scale "network effects": the value of an identifier increases the more it is used consistently” [18].

A research document contains references to things, from abstract concepts to real-world objects; anything which may be referenced from a publication (people, methods, diseases, species, organisations, and so on). For scholars it must be possible to unambiguously indicate the subjects of discussion by creating their own identifiers for these entities or by reusing existing identifiers coined by others. This is (arguably) a more accessible way to make associations between articles and data referring to the same entities than alternative natural language processing based techniques. For instance, the progressive adoption of the Digital Object Identifier (DOI) or HTTP URIs for things, along with Nanopublications [19], makes it possible to declare and represent scientific knowledge claims that are globally identifiable on the Web [10, 20]. Such initiatives for citation of research artefacts beyond the document are a strong step towards this area of Linked Research.

Integration of data: In many cases some form of structured data is a direct subject of scholarly communication. In social sciences, for example, statistical data plays a key role as ground truth for validating or falsifying theories. Similarly, in engineering and natural sciences, measurement or observation data is of crucial importance. In the life sciences, statistics, anonymised patient data as well as taxonomic and ontological data can be central. Ideally, such data is directly integrated into the digital representation of a scholarly article in both human- and machine-readable forms. This permits both live updates to the article (including charts and figures) as new data emerges with no extra effort to the authors, and the ability for closer exploration of the data for better understanding by consumers.

User experience and tooling

Feedback and interactions: When others interact with published work, for example to cite, comment, review, share, recommend or annotate, the original authors should be notified and able to view and react to any new content produced in response to their work. Conversations and commentary may be stored across different systems; however, there must be a mechanism for aggregating and displaying scientific discourse around the subject resources to benefit authors and consumers alike.

Support for different views: Content is consumed in a wide variety of formats online and offline, on devices of different sizes and capabilities, and with different interaction techniques (touch screens, speech input/output). Scholarly communication should exploit these different media, presentation and interaction techniques to allow scholarly content to be consumed in different situations [21]. The content should adapt to the available presentation capabilities, and alter its appearance according to some combination of the author's and consumer's preferences.

Adaptation to audiences: Depending on the audience, presentation of scholarly communication should happen on different levels of granularity. For experts in the field, explanations can be shorter, examples are not required and only a few illustrations are needed. For newcomers, on the other hand, more detailed explanations, illustrations and examples are helpful. Ideally, scholarly content can adapt to the audience. This should happen from a single source of content to minimise the burden on the author, where content elements are marked in such a way that the adaptation to the audience can be performed largely automatically.

Integration of interactive content: Where possible, scholarly content should provide dynamic and interactive content. Examples include:

- executable software source code snippets, which demonstrate algorithms, queries, workflows etc. ([22] calls for these to be considered as a critical research output).

- interactive data visualisations with different diagram types, which allow users to zoom in, filter etc.

- small games or self assessment tests, which allow readers to interact with the content in a playful way or to test their comprehension of the knowledge

- widgets or applications, which provide interactive domain-specific content (e.g. exploring a large phenotype taxonomy)

Support for multimedia: Multimedia content such as videos, audio, and 3D simulations can dramatically improve the comprehension of scholarly content compared to the traditional static 2D illustrations currently found in papers. In particular, fields that deal heavily with multimedia data, such as engineering, arts, audio and video analysis, and medicine, would benefit.

Impact metrics: As the volume of scholarly contributions increases, investigators rely on services and filters to choose the most relevant and significant sources. What constitutes relevant and significant may be at the discretion of the service in use, and can be diverse. For instance, traditional author-level metrics, while widely employed, capture only bibliographic impact. The rise and adoption of altmetrics as an alternative aims to incorporate a wide range of information on scholarly contributions in order to derive more immediate and contextually relevant metrics, e.g., webometrics [23]. Based on this, reward structures can then be re-engineered if reuse or repurposing of all scholarly or other contributions (e.g., data, methods, social activities and engagement, as well as non-technical implications of science) is taken into account. The availability of machine-readable altmetrics is well aligned to further facilitate Linked Research.

Concepts

Having defined requirements for the problem space of scholarly communication as a whole based on observations and existing work, we now consider how each topic area fits into the overall scholarly communication workflow, and indicate which of the requirements are pertinent for each stage of the process of scholarly communication. We describe expectations for each stage of the process, and propose technical solutions and methods aiming at meeting the related requirements. The Linked Research concepts and how they relate to one another are shown in Figure 1, and their relation to the requirements, technologies and stakeholders is visualised in Figure 2.

Our primary approach is to reuse existing technologies, techniques and specifications, combined in novel ways to meet the needs we have identified. We focus on the Web as a publishing platform for distribution and consumption of content and data in a decentralised way.

Research publishing

The Linked Research initiative encourages researchers to work transparently, publish early and often and without asking for permission. The Web provides the perfect platform for this. Ideally authors should acquire their own domain name and Web hosting; we acknowledge that this is not always possible or practical, though many institutions provide basic Web hosting and a URL space for all of their staff and students. Hence, identifiers such as DOI, PURL, w3id, or the like are possible ways to give the information some level of permanence.

Publishing work online in static HTML allows wide accessibility for consumers and for added value services. Using CSS and JavaScript makes it possible to refine, adapt or even provide alternative views on a research document according to the consumer’s needs or viewing context.

Distributing work with URLs allows sharing equally between academics and specialists, and interested citizens. Linking between articles enhances discoverability, and coupling this with social notifications (ActivityStreams 2.0 is an appropriate emerging W3C standard which would enable interoperability with other social systems) allows authors to track citations, the reach of their work, and receive and display responses and feedback.
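To make the idea of a social notification more concrete, here is a minimal sketch of how a response to an article could be described with ActivityStreams 2.0 vocabulary and serialised as JSON. The choice of a `Create` activity wrapping an `Article` with `inReplyTo`, the helper name, and all example.org URLs are assumptions made for illustration; the text above does not prescribe a particular activity shape.

```python
import json

# The ActivityStreams 2.0 JSON-LD context.
AS2_CONTEXT = "https://www.w3.org/ns/activitystreams"


def build_reply_activity(actor, reply_url, target_url):
    """Describe 'actor published reply_url in response to target_url'."""
    return {
        "@context": AS2_CONTEXT,
        "type": "Create",
        "actor": actor,
        "object": {
            "id": reply_url,
            "type": "Article",
            "inReplyTo": target_url,
        },
    }


# Hypothetical identifiers, used only for demonstration.
activity = build_reply_activity(
    actor="https://beverly.example.org/#me",
    reply_url="https://beverly.example.org/articles/response",
    target_url="https://alexander.example.org/articles/hypothesis",
)
serialised = json.dumps(activity, indent=2)
```

A receiving system that understands the same vocabulary could read `inReplyTo` to attach the response to the original article, regardless of where the response itself is hosted.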

It is important to include licensing information with published work in order to promote fair use and remixing for content creators. We do not presume to dictate the most appropriate licenses for academic work to be released under; however, it is worth emphasising that establishing common practices around open licenses such as those from the Creative Commons family is important to encourage a culture of openness and reuse, which, as we previously discussed, is important for healthy scientific progress.

Calls for Contributions

Calls for contributions to journals and conferences are usually published online, and an increasing number of venues are accepting HTML submissions e.g., LDOW, ISWC, SemStats. A step further to encourage ownership and control over research work is to accept submission by URL, whereby the authors submit notifications of a ‘reply’ to a call.

While most calls for contributions are available in plain and simple HTML, they are typically not semantically enriched. Making their semantics explicit and then publishing them in compliance with the Linked Data design principles would improve their interlinkability with other knowledge graphs such as research articles or social feedback. Composing the call with additional structured data, for instance with concepts from controlled taxonomies (e.g., SKOS, DBpedia), topical requirements, and a list of contributors for peer-reviewing, enables better mining of such data. It can be used for discovering calls suitable for a paper draft (i.e. venue recommendation), relating one call to similar calls, as well as watching the evolution of topical trends in research communities [25].

Peer-reviews and Interactions

In a Linked Research community, reviewers are able to see replies to a call they have subscribed to, or have been subscribed to, and in turn leave their reviews as replies to the submitted articles. Rather than centralised submission and review management systems such as EasyChair, we employ workflows for ‘sharing’ that people are nowadays used to from social media. As peer-reviews are valuable contributions to the academic ecosystem, we encourage reviewers to publish their reviews in a Web space that they control (or trust), for the benefit of the community, in just the same manner as research articles themselves are published. With the optional adoption of attributed peer-reviews, responses can be rigorous and objective, while retaining transparency and identity of the reviewers. These open reviews are also available for the community to discuss and build on.

Proceedings

Editors and committees who have issued calls for contributions are able to take into account reviews and discussion on work they have been notified about, and select the articles to aggregate and formally endorse them as part of their journal or event proceedings. The same Web-based tooling which allowed authoring of articles, calls and reviews and notifications of responses in the first place can be purposed to automatically assemble and re-publish the chosen work as a collection, which can in turn be shared and responded to.

Persistence and Preservation

Ensuring the persistence of scientific results through time is of fundamental importance: readers should be able to access articles published in the past, and authors should be able to point to articles and the statements within with confidence that they will be accessible in the future. As of today, archiving and preserving scientific documents are commonly considered the responsibilities of publishers. In the context of Linked Research, where decentralised authoring and publishing is promoted, responsibilities are to be re-evaluated: authors can publish their results online at a service of their choice, such as their own website. However, keeping academic publications on the Web over the long term poses two main challenges which may be difficult for individuals to solve when hosting content themselves: 1) availability on the Internet (keeping the document/data server available, maintaining it, and defending it from attacks), and 2) presence on the Web; namely, maintaining a URL that points to the document/data and that does not change. Long-term obstacles to this include URL expiration, revocation (in case of someone taking legal action) and censorship [26].

We already see many efforts at centralised archives - Internet Archive, Archive-It, arXiv, CLOCKSS, Zenodo - however these are each a single point of failure for long-term persistence. In the case of self-archiving, the author is responsible for maintaining their service and domain name. This strategy clearly has non-trivial disadvantages: technical expertise is required, there are maintenance costs, and content may disappear if the author loses the ability to keep this up. In the case of third-party archival, although the responsibilities are delegated to a service, this service may cease to exist in the long term, or may act against the interest of the user.

An architecture in which documents are replicated on multiple servers can work towards solving these problems. If the URL is not reachable, the reader should be able to get a copy of the content from elsewhere. ‘Lots of Copies Keep Stuff Safe’ is the idea behind LOCKSS, a project that aims to create a network of libraries and publishers that replicate scientific articles amongst one another [27]. Based on this idea, other work uses existing distributed networks such as BitTorrent [28].

When content is distributed across a decentralised network, documents must have pointers that the network agrees upon. This can be done simply by naming documents via their hashes, as in the InterPlanetary File System (IPFS). This also allows proof that the file has not been altered by nodes in the network, since verification only requires re-hashing the file and comparing the result with its name. Recent work shows how Trusty URIs can employ hashing techniques whilst remaining compatible with the Web [29].
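The naming-by-hash idea can be sketched in a few lines of Python. This is a simplified illustration using SHA-256 from the standard library; it does not reproduce the actual identifier formats of IPFS or Trusty URIs, and the `sha256:` prefix, the function names and the sample documents are hypothetical.

```python
import hashlib


def content_name(data: bytes) -> str:
    """Derive a name for a document from its bytes alone."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def verify(name: str, data: bytes) -> bool:
    """Re-hash the received bytes and compare with the expected name."""
    return content_name(data) == name


def first_valid_copy(name, copies):
    """Return the first replica whose hash matches the name, or None."""
    for data in copies:
        if verify(name, data):
            return data
    return None


original = b"<article>Results and analysis</article>"
altered = b"<article>Altered results</article>"
name = content_name(original)

# A faithful replica verifies; a modified copy does not.
replica_ok = verify(name, original)
tampered_ok = verify(name, altered)

# Replicas may come from any node; only an unaltered one is accepted.
recovered = first_valid_copy(name, [altered, original])
```

Since the name depends only on the content, a reader can accept a copy from any server in the network without having to trust that server.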

Ultimately in a Linked Research ecosystem, the role of content creators is also to distribute the content, and a role of public institutions in supporting preservation is to provide reliable hosting, addressing and backup in a decentralised manner. We envision a two-way system. Organisations such as libraries and universities can actively create collections by selecting, sorting and storing published articles. Additionally, authors can submit work themselves to archives, for either consideration by curators or automatic inclusion for backup. Currently in order to do this scholars submit their work manually into different systems, which can result in inconsistent or infrequent updates. To improve on this, the same open Web protocols as described for calls and proceedings can be used to automatically notify chosen archives whenever a new piece of work or dataset is ready.

Profiles

Just as authors can choose domains they trust to identify their work, they can also create URIs to identify themselves. By connecting this identifier to all of their publications, results and impact of the work they are involved with can be traced directly back to them. Researchers can build a profile which automatically includes their published work and their feedback on the work of others.

Currently, many academics choose to create professional profiles on centralised systems such as ImpactStory, Google Scholar, ResearchGate, Zenodo or Academia.edu. Such systems are poorly integrated with each other, and users frequently must enter their details repeatedly or choose one system and miss out on potentially connecting with members of others. Similarly from within one system, users cannot see the activities of users in other systems, even though many of the types of social activities afforded by the systems (reading or recommending a paper for example) are the same.

User Stories

We describe an acid test so that systems can be evaluated against the requirements of Linked Research previously outlined. The evaluation is intended to verify the openness, accessibility, decentralisation and interoperability of approaches in scholarly communication, and takes the form of a series of user stories which cover the full spectrum of scholarly communication and which must be feasible to carry out with the proposed system. This test does not mandate a specific technology, therefore the challenge can be met by different solutions. It is intended to test the design philosophies so that different approaches may be closer to passing the independent invention test.

This acid test is extended from the Enabling Accessible Knowledge Acid Test [30] to encompass more aspects of the academic workflow, beyond authoring and publishing research articles. The acid test consists of assumptions about proposed solutions, and challenges of which proposed solutions must meet one or more.

- Assumptions

- All interactions conform with open standards, with 1) no dependency on proprietary APIs, protocols, or formats, and 2) no commercial dependency or prior relationship between the groups using the workflows and tools involved.

- Any mechanisms are available through at least two different interoperable tool stacks.

- Information and interactions are available for free and open access with suitable licensing and attribution for retrieval and reuse.

- Information is both human and machine-readable.

- All interactions are possible without prior out-of-band knowledge of the user’s environment or configuration.

- Challenges

- Alexander makes his article available on the Web with the research objects available at fine granularity, e.g., variables of a hypothesis.

- Beverly references and discusses Alexander’s research objects from her own research article, e.g., an argument against a methodological step.

- Carol annotates Beverly’s argument on Alexander’s work by suggesting that it was misinterpreted, and stores the note publicly at her own personal content store.

- Darmok and Eve write reviews for Beverly’s article and store them at their preferred locations respectively; Beverly is notified about the reviews.

- Frank publicly announces a call for contributions for an academic conference. He specifies the scope of the call and desired qualities of the submissions.

- Guinan notices Frank’s call for contributions to be suitable for her research article, and submits a link to her work. In her article, Guinan indicates that it was a reply to the call.

- Frank assigns Herman, Inigo, and Jean-Luc to peer-review Guinan’s work. Herman and Inigo both write their reviews so that only Frank and Guinan may read them; meanwhile Jean-Luc makes his review public.

- Keiko is assisting Frank, and compiles a list of articles which meet the requirements and standards of Frank’s call for contributions based on reviews and public feedback. She sends alerts to various institutions that the proceedings are ready to be archived.

- Liz manages scholarly archiving at her institution; she retrieves and catalogues the articles which were mentioned for library indexing.

- Marshall is a PhD student; he uses the interactive components in Beverly’s article by changing the parameters and rerunning an experiment and decides to expand on this for his own work.

- Nelson reads Alexander’s article from his handheld device, and prints a single page summary.

- Ophelia notices the new index of articles in response to Frank’s call and selects the ones she is interested in for her personal collection of potential references.

- Paris sees the peer-reviews about Guinan’s work and proceeds to discover further information about the qualifications and experience of the reviewers.

- At Frank’s conference, Q listens to the presentations from the authors and makes personal notes about the work; he occasionally makes his observations visible to the authors or rest of the conference audience.

- Rajiya aggregates all of the scholarly communication available in the last 5 years, and builds a visualisation to analyse research gaps. She then forecasts emerging fields.

- Sahrahsahe builds a profile of her research lab, and runs a comparative analysis to check which funding opportunities are applicable and offer a good chance of obtaining a grant.

- Tabitha is interested in identifying research articles that generate controversial social activity, so she runs a filter for long running discussions with varying social reactions in a community query service.

From these challenges, we see how the requirements and concepts laid out previously fit into various stages of academic workflow. Solutions which meet one or more of these challenges, according to the assumptions, can be considered conformant with Linked Research principles.

Realisation

In this section we describe our own implementations, which aim at fulfilling some of the requirements stated previously. We pay particular attention to rich, semantic authoring, and decentralised publishing of content, data and feedback. We describe the technologies we used to meet each requirement, and how we combined certain technologies and approaches in novel ways. We conclude with a brief discussion of requirements yet to be met and how we plan to go about tackling these.

Peer review and interactions

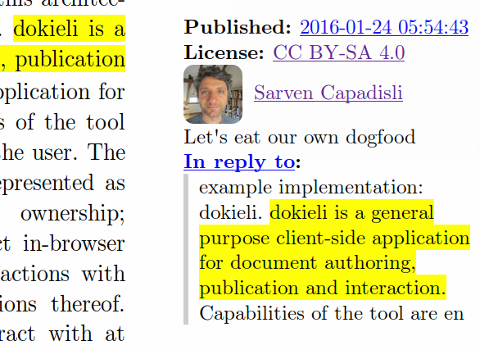

We support the rights of authors to own their data, and store and publish it where they feel most comfortable. This includes the social interactions and feedback users make around existing publications. Rather than centralising these interactions around the subject document, we took the decision to default to decentralisation of all content by allowing users to authenticate with their personal data space, and choose the location for their interactions at the point of making them. This gives rise to the need for a mechanism to notify the original author that their document has received some interaction. We achieve this by allowing document authors to specify an inbox, using the Solid inbox predicate, for either their article as a whole or any subsection with its own URI. Inboxes are containers in a data space which may be appended to by anyone, and do not need to be on the same server as the document itself; if one article has multiple inboxes they can be distributed across as many data spaces as is convenient for the author(s). When an annotation is made, dokieli follows the appropriate inbox link and writes a notification there (see figure 3 for this process and listing 1 for notification contents). When the document is loaded, links are followed to all inboxes in order to retrieve the interactions that the notifications announce, so that these can be displayed along with the document (figure 4 and figure 5).

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .
@prefix schema: <https://schema.org/> .
@prefix solid: <http://www.w3.org/ns/solid/terms#> .
@prefix as: <https://www.w3.org/ns/activitystreams#> .
@prefix oa: <http://www.w3.org/ns/oa#> .
@prefix c: <https://creativecommons.org/licenses/by/4.0/> .
<> a as:Announce ;
  as:object <http://example.net/foo/abc123> ;
  as:target <http://example.org/article#conclusions> ;
  as:updated "2016-01-24T00:00:00Z"^^xsd:dateTime ;
  as:actor <https://csarven.ca/#i> ;
  schema:license c: .
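As a rough illustration of the notification step, the following Python sketch assembles a notification like the one in listing 1 and prepares an HTTP POST to an inbox. It is not dokieli's code (dokieli runs in the browser); the function names, the example inbox URL and the choice of `text/turtle` as the request content type are assumptions made for this sketch.

```python
from urllib.request import Request

# Prefixes actually used by the notification body below.
PREFIXES = {
    "xsd": "http://www.w3.org/2001/XMLSchema#",
    "schema": "https://schema.org/",
    "as": "https://www.w3.org/ns/activitystreams#",
}


def build_notification(object_uri, target_uri, actor_uri, updated, license_uri):
    """Return a Turtle document describing an as:Announce notification."""
    lines = [f"@prefix {prefix}: <{ns}> ." for prefix, ns in PREFIXES.items()]
    lines += [
        "<> a as:Announce ;",
        f"  as:object <{object_uri}> ;",
        f"  as:target <{target_uri}> ;",
        f'  as:updated "{updated}"^^xsd:dateTime ;',
        f"  as:actor <{actor_uri}> ;",
        f"  schema:license <{license_uri}> .",
    ]
    return "\n".join(lines) + "\n"


def prepare_inbox_post(inbox_uri, turtle):
    """Build (but do not send) a POST request that appends to the inbox."""
    return Request(
        inbox_uri,
        data=turtle.encode("utf-8"),
        headers={"Content-Type": "text/turtle"},  # assumed serialisation
        method="POST",
    )


notification = build_notification(
    object_uri="http://example.net/foo/abc123",
    target_uri="http://example.org/article#conclusions",
    actor_uri="https://csarven.ca/#i",
    updated="2016-01-24T00:00:00Z",
    license_uri="https://creativecommons.org/licenses/by/4.0/",
)
# Hypothetical inbox location; in practice it is discovered from the document.
request = prepare_inbox_post("http://example.org/inbox/", notification)
# Passing `request` to urllib.request.urlopen would send it; that call is
# left out so the sketch has no network side effects.
```

Keeping the request construction separate from sending makes it easy to inspect what would be written to the inbox before anything leaves the client.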

An author can also opt to allow anonymous interactions with their documents by pointing to a publicly writeable storage location in their own space, and store interactions on behalf of their audience. Conversely, an author who does not want to display interactions on their article can omit the inbox, but readers can still save notes on an article for their own use, using the same mechanism.
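The retrieval side, in which all inboxes of a loaded document are consulted for interactions, might look roughly like the sketch below. It is a simplification under stated assumptions: `collect_interactions`, the dictionary shape of a notification and the in-memory inbox data are hypothetical, and a real client would fetch and parse RDF over HTTP instead.

```python
def collect_interactions(inbox_uris, fetch_notifications, document_uri):
    """Gather the interactions announced for a document or its subsections.

    fetch_notifications is a caller-supplied function that takes an inbox URI
    and returns a list of dicts with "object" and "target" keys (a
    hypothetical shape chosen for this sketch).
    """
    interactions = []
    seen = set()
    for inbox in inbox_uris:
        for note in fetch_notifications(inbox):
            target = note["target"]
            # Match the whole article or any subsection (fragment) of it.
            if target.split("#", 1)[0] != document_uri:
                continue
            if note["object"] in seen:
                continue  # same interaction announced in more than one inbox
            seen.add(note["object"])
            interactions.append((target, note["object"]))
    return interactions


# Two inboxes on different servers, stubbed with in-memory data.
INBOXES = {
    "http://example.org/inbox/": [
        {"object": "http://example.net/foo/abc123",
         "target": "http://example.org/article#conclusions"},
    ],
    "http://example.com/alt-inbox/": [
        {"object": "http://example.net/foo/abc123",
         "target": "http://example.org/article#conclusions"},
        {"object": "http://example.net/bar/def456",
         "target": "http://example.org/other-article"},
    ],
}

found = collect_interactions(INBOXES, INBOXES.get, "http://example.org/article")
print(found)
```

Injecting the fetch function keeps the matching and de-duplication logic independent of how, and from which data space, the notifications are actually retrieved.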

A dokieli research article can contain a relationship, e.g., as:inReplyTo, to the call for contributions. That is, every scholarly article can be written in the context of a conference’s or journal’s call for contributions. This current article on Linked Research is a reply to the ACM Hypertext 2016 Call for Contributions, and contains an RDF statement as follows:

@prefix sioc: <http://rdfs.org/sioc/ns#> .
@prefix as: <https://www.w3.org/ns/activitystreams#> .
<https://csarven.ca/linked-research-scholarly-communication>
  as:inReplyTo <http://ht.acm.org/ht2016/calls> .

SemStats Call for Contributions

SemStats is a workshop that explores and strengthens the relationship between the Semantic Web and statistical communities. We have published all material on the site for the years 2013, 2014, and 2015 in HTML+RDFa using dokieli’s tooling. It contains interlinks like:

- The SemStats 2013 workshop is an as:inReplyTo to ISWC 2013 Call for Workshops

- The SemStats 2013 workshop also schema:hasPart SemStats 2013 Call for Papers

- SemStats 2013 Call for Papers then contains statements like: description, topic of interest, motivation, keywords, event data, related links, organising committee and affiliations.

SemStats CEUR Proceedings

In response to the SemStats 2013–2015 calls for papers, several papers were submitted to the workshop. Those that were accepted for publication became part of the proceedings. Where such a paper had previously been published at its own URI, the proceedings version points there. We have published the SemStats proceedings at CEUR-WS.org (see, e.g., http://ceur-ws.org/Vol-1549/) and at the same time introduced a modernised version of the CEUR-WS.org proceedings template based on dokieli’s HTML and CSS (but without JavaScript, which is not permitted by the CEUR-WS.org policy). Figure 6 demonstrates the interlinks between the peer-reviewed research article Linked Statistical Data Analysis, the SemStats 2013 call for contributions, the ISWC 2013 call for workshops, and the proceedings of the SemStats workshop at CEUR-WS.org.

@prefix sioc: <http://rdfs.org/sioc/ns#> .
@prefix as: <https://www.w3.org/ns/activitystreams#> .
@prefix schema: <https://schema.org/> .
@prefix bibo: <http://purl.org/ontology/bibo/> .
<http://semstats.org/2013/>
  as:inReplyTo <http://iswc2013.semanticweb.org/content/call-workshops.html> ;
  schema:hasPart <http://semstats.org/2013/call-for-papers> .
<https://csarven.ca/linked-statistical-data-analysis>
  as:inReplyTo <http://semstats.org/2013/call-for-papers> ;
  bibo:citedBy <http://ceur-ws.org/Vol-1549/> .
<http://ceur-ws.org/Vol-1549/>
  schema:hasPart <http://ceur-ws.org/Vol-1549/#article-06> .
<http://ceur-ws.org/Vol-1549/#article-06>
  bibo:uri <https://csarven.ca/linked-statistical-data-analysis> .
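To suggest how such interlinks could be consumed, the sketch below loads the statements from the listing above as plain Python tuples and follows them from a workshop to the articles that replied to its call. The tuples stand in for an RDF store, and the helper names (`objects`, `subjects`, `replies_to_workshop_calls`) are hypothetical; this is an illustration, not part of our tooling.

```python
AS = "https://www.w3.org/ns/activitystreams#"
SCHEMA = "https://schema.org/"
BIBO = "http://purl.org/ontology/bibo/"

# The statements of the listing, written as (subject, predicate, object).
TRIPLES = [
    ("http://semstats.org/2013/", AS + "inReplyTo",
     "http://iswc2013.semanticweb.org/content/call-workshops.html"),
    ("http://semstats.org/2013/", SCHEMA + "hasPart",
     "http://semstats.org/2013/call-for-papers"),
    ("https://csarven.ca/linked-statistical-data-analysis", AS + "inReplyTo",
     "http://semstats.org/2013/call-for-papers"),
    ("https://csarven.ca/linked-statistical-data-analysis", BIBO + "citedBy",
     "http://ceur-ws.org/Vol-1549/"),
    ("http://ceur-ws.org/Vol-1549/", SCHEMA + "hasPart",
     "http://ceur-ws.org/Vol-1549/#article-06"),
    ("http://ceur-ws.org/Vol-1549/#article-06", BIBO + "uri",
     "https://csarven.ca/linked-statistical-data-analysis"),
]


def objects(subject, predicate):
    """All objects o such that (subject, predicate, o) is stated."""
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def subjects(predicate, obj):
    """All subjects s such that (s, predicate, obj) is stated."""
    return [s for s, p, o in TRIPLES if p == predicate and o == obj]


def replies_to_workshop_calls(workshop):
    """Pairs (article, call) for every call that is part of the workshop."""
    calls = objects(workshop, SCHEMA + "hasPart")
    return [
        (article, call)
        for call in calls
        for article in subjects(AS + "inReplyTo", call)
    ]


replies = replies_to_workshop_calls("http://semstats.org/2013/")
print(replies)
```

The same two lookups are enough to walk in the other direction, for example from a proceedings entry via bibo:uri back to the article's own URI.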

Ongoing and Future Work

There remain a number of features to add to our implementation to meet more of the requirements of the acid test.

Archiving and preservation: Organisations (or indeed individuals) who wish to make their resources available for archiving work can advertise this fact through linking to an inbox where requests to archive can be sent, optionally along with criteria (human- and machine-readable) which submitted material must meet. Requesting inclusion in an archive involves the same underlying mechanism as any kind of notification to an inbox (replying to a call, creating an annotation), though there are user interface optimisations we can make to streamline the process. Similarly, for the managers of archival collections, the technical mechanism for duplicating a document and creating a new URI exists in the current ‘Save As’ functionality; however, we can automate this for submissions to archives, whilst generating new semantic relations between the original documents and archival copies such as sameAs or derivedFrom.

Collections: Personal collections of work are similar to archives, proceedings and journals, except that one user is adding third-party documents to a collection over which they have control, so there is no request/accept process for something to be included. This is therefore similar to bookmarking, and it is likely that a reference to the original rather than a direct copy will be stored in the collection owner’s space. dokieli functionality for managing collections of all kinds will also facilitate these kinds of personal collections, including the option to send a notification to the original author to let them know their work has been bookmarked. A particular use for this is for when a collection owner is writing their own academic article, and is able to auto-populate references and citations from their collections.

Profiles: An extension to dokieli which allows authors to pre-fill their affiliations and contact details permits automatic population of this data in new articles. Additionally, dokieli can automatically update an academic profile at the author’s own domain whenever new publications are authored or feedback left on the work of others.

Notifications and feedback: We do not currently have good tooling for management of notifications and feedback on articles, particularly if feedback can be of different types (e.g. suggestions, questions, disagreements, notes) that may be subsequently resolved by article authors. This may be in the form of an extension or a separate, generalisable application outside of the dokieli core.

Versioning: Much research is evolving, ongoing work, and we require a solution for better management and identification of snapshots of articles; in particular to associate feedback with a specific version of work which may be subsequently addressed, or to show which iteration of an article was accepted to a conference or journal.

Conclusions

In this article, we have described requirements for exploiting the possibilities of digitisation and the Web for scholarly communication. The vision of Linked Research comprises concepts which can be realised by meeting these requirements, and we propose technical solutions for doing so. We demonstrate the feasibility of these proposals with a prototype implementation that covers several of the Linked Research concepts, and compare this coverage with existing tooling. We used this prototype, dokieli, for drafting and publishing this article.

Linked Research meets the criteria set forth in The Five Stars of Online Journal Articles [33] and we see this work as the first step on a larger research and development agenda. We envision an ecosystem of Linked Research compatible implementations and interfaces to existing systems (conference and journal management, editorial systems, open access repositories etc.). Ultimately, we hope that such developments will bring about a revolution in how scholarly knowledge is generated, published, shared and consumed.

Acknowledgements

The motivation and work on Linked Research is inspired by Marshall McLuhan, Theodor Holm Nelson, James Burke, and Tim Berners-Lee.

Special thanks to many colleagues who helped one way or another during the course of this work (not implying any endorsement); in no particular order: Richard Cyganiak, Kingsley Idehen, Jodi Schneider, Paul Groth, Stian Soiland-Reyes, Raphaël Troncy, Anchalee Panigabutra-Roberts, as well as colleagues at MIT/W3C.

This research was supported in part by Qatar Computing Research Institute, HBKU through the Crosscloud project.

References

- Borgman, C.: Scholarship in the Digital Age: Information, Infrastructure, and the Internet, 2007, ISBN 9780262026192, https://mitpress.mit.edu/books/scholarship-digital-age

- Berners-Lee, T.: W3C 1998, Webizing existing systems, https://www.w3.org/DesignIssues/Webize.html

- Procter, R., Williams, R., Steward, J., Poschen, M., Snee, H., Voss, A., Asgari-Targhi, M.: Adoption and use of Web 2.0 in scholarly communications. Philosophical Transactions of the Royal Society of London A: Mathematical, Physical and Engineering Sciences 2010(368):4039-4056, DOI: 10.1098/rsta.2010.0155, http://rsta.royalsocietypublishing.org/content/368/1926/4039

- Licia, C., Cassella, M.: Scholarship 2.0: analyzing scholars’ use of Web 2.0 tools in research and teaching activity. Liber Quarterly 23.2 (2013): 110-133, https://www.liberquarterly.eu/articles/10.18352/lq.8108/

- Neylon, C., Wu, S.: Open Science: tools, approaches, and implications. Pac Symp Biocomput. 2009:540-4, http://psb.stanford.edu/psb-online/proceedings/psb09/workshop-opensci.pdf

- Piwowar, H. A., Day, R. S., Fridsma, D. B.: Sharing Detailed Research Data Is Associated with Increased Citation Rate, PLoS ONE, 2007, DOI: 10.1371/journal.pone.0000308, http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0000308

- Suber, P., Brown, P. O., Cabell, D., Chakravarti, A., Cohen, B., Delamothe, T., Eisen, M., Grivell, L., Guédon, J-C., Hawley, R. S., Johnson, R. K., Kirschner, M. W., Lipman, D., Lutzker, A. P., Marincola, E., Roberts, R. J., Rubin, G. M., Schloegl, R., Siegel, V., So, A. D., Varmus, H. E., Velterop, J., Walport, M. J., Watson, L.: Bethesda Statement on Open Access Publishing, 2003, https://dash.harvard.edu/bitstream/handle/1/4725199/suber_bethesda.htm

- Ensor, P.: The Functional Silo Syndrome, AME Target (1988):16, http://www.ame.org/sites/default/files/documents/88q1a3.pdf

- Berners-Lee, T.: Socially Aware Cloud Storage, W3C, 2009, https://www.w3.org/DesignIssues/CloudStorage.html

- Bechhofer, S., Buchan, I., De Roure, D., Missier, P., Ainsworth, J., Bhagat, J., Couch, P., Cruickshank, D., Delderfield, M., Dunlop, I., Gamble, M., Michaelides, D., Owen, S., Newman, D., Sufi, S., Goble, C.: Why linked data is not enough for scientists. Future Gener. Comput. Syst. 29, 2 (2013), 599-611, http://dx.doi.org/10.1016/j.future.2011.08.004

- Klein, M., Van de Sompel, H., Sanderson, R., Shankar, H., Balakireva, L., Zhou, K., Tobin, R.: Scholarly Context Not Found: One in Five Articles Suffers from Reference Rot, PLoS ONE, 2014, DOI: 10.1371/journal.pone.0115253, http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0115253

- Work, S., Haustein, S., Bowman, T. D., Larivière, V.: Social Media in Scholarly Communication, Canada Research Chair on the Transformations of Scholarly Communication, 2015, http://crctcs.openum.ca/files/sites/60/2015/12/SSHRC_SocialMediainScholarlyCommunication.pdf

- Kuhn, T. S.: The Structure of Scientific Revolutions, University of Chicago Press, 1962, ISBN 9780226458113

- Wagner, W., Steinzor, R.: Rescuing Science from Politics: Regulation and the Distortion of Scientific Research, Cambridge University Press, 2006 p. 224, ISBN 9780521855204

- Wicherts, J. M.: Peer Review Quality and Transparency of the Peer-Review Process in Open Access and Subscription Journals, 2016, DOI: 10.1371/journal.pone.0147913, http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0147913

- Data Citation Synthesis Group: Joint Declaration of Data Citation Principles. Martone M. (ed.): FORCE11, https://www.force11.org/datacitation

- Berners-Lee, T.: Universal Resource Identifiers -- Axioms of Web Architecture, W3C, 1996, https://www.w3.org/DesignIssues/Axioms.html#Universality2

- Jacobs, I., Walsh, N., W3C Technical Architecture Group: Architecture of the World Wide Web, Volume One, W3C, 2004, https://www.w3.org/TR/webarch/#identification

- Mons, B., Velterop, J.: Nano-Publication in the e-science era, Semantic Web Applications in Scientific Discourse, 2009, http://ceur-ws.org/Vol-523/Mons.pdf

- de Waard, A., Buckingham Shum, S., Carusi, A., Park, J., Samwald, M. and Sándor, Á.: Hypotheses, Evidence and Relationships: The HypER Approach for Representing Scientific Knowledge Claims, Semantic Web Applications in Scientific Discourse, 2009, http://ceur-ws.org/Vol-523/

- Capadisli, S., Auer, S., Riedl, R.: This ‘Paper’ is a Demo, ESWC Satellite Events (2015), https://csarven.ca/this-paper-is-a-demo

- Ahalt, S., Carsey, T., Couch, A., Hooper, R., Ibanez, L., Idaszak, R., Jones, M. B., Lin, J., Robinson, E.: NSF Workshop on Supporting Scientific Discovery Through Norms and Practices for Software and Data Citation and Attribution. Technical Report. National Science Foundation, USA, 2015, http://dl.acm.org/citation.cfm?id=2795624

- Groth, P., Gurney, T.: Studying Scientific Discourse on the Web Using Bibliometrics: A Chemistry Blogging Case Study, Proceedings of the WebSci10: Extending the Frontiers of Society On-Line, 2010, http://journal.webscience.org/308

- Godby, C. J., Wang, S., Mixter, J. K.: Library Linked Data in the Cloud: OCLC’s Experiments with New Models of Resource Description, Synthesis Lectures on the Semantic Web: Theory and Technology, 2015, 5:2, 1-154, http://www.worldcat.org/oclc/90981101

- Osborne, F., Scavo, G., Motta, E.: Identifying diachronic topic-based research communities by clustering shared research trajectories, Research Track, ESWC (2014), http://oro.open.ac.uk/39666/3/ESWC2014_CR

- Berners-Lee, T.: Cool URIs don't change, W3C (1998), https://www.w3.org/Provider/Style/URI.html

- Rosenthal, D. S. H.: What Could Possibly Go Wrong? BIBLIOTHEK – Forschung und Praxis 2015; 39(2): 180–188, DOI 10.1515/bfp-2015-0022, http://www.lockss.org/locksswp/wp-content/uploads/2015/06/bfp-2015-0022-1.pdf

- Markman, C., Zavras, C.: BitTorrent and Libraries: Cooperative Data Publishing, Management and Discovery, Volume 20, Number 3/4, 2014, doi:10.1045/march2014-markman, http://www.dlib.org/dlib/march14/markman/03markman.html

- Kuhn, T., Chichester, C., Krauthammer, M., Dumontier, M.: Publishing without Publishers: a Decentralized Approach to Dissemination, Retrieval, and Archiving of Data, ISWC 2015, http://iswc2015.semanticweb.org/sites/iswc2015.semanticweb.org/files/93660593.pdf

- Capadisli, S., Riedl, R., Auer, S.: Enabling Accessible Knowledge, CeDEM (2015), https://csarven.ca/enabling-accessible-knowledge

- Capadisli, S., Guy, A., Auer, S., Berners-Lee, T.: dokieli: decentralised authoring, annotations and social notifications, 2016, https://csarven.ca/dokieli

- Peroni, S.: The Semantic Publishing and Referencing Ontologies. In Semantic Web Technologies and Legal Scholarly Publishing: 121-193. Cham, Switzerland: Springer. http://dx.doi.org/10.1007/978-3-319-04777-5_5

- Shotton, D.: The Five Stars of Online Journal Articles, D-Lib Magazine (2012), http://www.dlib.org/dlib/january12/shotton/01shotton.html

Interactions

6 interactions

Jindřich Mynarz mentioned on

Myles Byrne replied on

It is poignant to see comments to the effect of, 'great work, but until there is mass adoption, science publishing and funding are keeping us stuck in MSWord and PDF land'. Such comments could be cited within the paper itself as proof of its rationale. Career scientists invested in playing the NatureCellScience game may not feel a strong incentive to make immediate use of this work, for indeed, it is not instrumental to climbing the status ladders of legacy institutions. But such scientists also sound out-of-date, wedded to forms of collegiality that have grown increasingly ineffectual over the last decades, leading to the current crises in reproducibility, access, and career viability.

A mass shift in the social organisation of science and web publishing is obviously underway. What is the point of waiting for the shift to be over, when you can be part of it happening? Remember how science began: by pursuing the spirit of exploration and discovery against the established order, in the name of knowledge for all. Now, we're beginning again. Whether you're inside or outside the ivied walls of NatureCellScience, the collegial spirit of science lies in this direction.

Anonymous Reviewer replied on

In this paper, the authors propose a web-based framework for scholarly communication, addressing a significant number of the processes involved. For that purpose, they present an exhaustive list of requirements, and highlight how some of such requirements could be implemented using web-based technologies and techniques.

The paper, without any doubt, is interesting and well written. Nonetheless, in its current form, I think it is not yet mature enough to be accepted as a long paper. Among other things, and more specifically:

- An analysis of the limitations, difficulties and risks related to the implementation of some of the scholarly communication processes should be presented.

- It should present evidence (e.g., user interface screenshots, statistics about collected data and linked resources, evaluations, etc.) of the presumably implemented prototype.

I thus suggest the paper may be shortened and accepted as a short paper.

Anonymous Reviewer replied on

The paper addresses many important issues that concern the scholarly publication transition process, but the scope of the paper is too broad to be covered in a single paper. Due to this very broad scope, the following issues arise:

I. Most of the paper is dedicated to generalities (requirements) that are covered very sparsely (Impact metrics, reward systems, quality assurance) and little space is left for presenting the work done itself.

II. The detailed part that follows (starting from section 4: Realization), only addresses a fraction of the scope (Authoring and Publishing). Remaining issues are then listed as ongoing or future work (Archiving and Preservation, Collections, Profiles, Notifications, Feedback, and Versioning). This gives an overall impression that the work described is currently mostly in the stage of development and thus targeting a full paper publication is probably premature.

III. The paper presents several strong points that are unfortunately left on a very general level, concerning authoring and publishing of research papers or exploiting in full extent available web technology. At least a few examples of topics that could become more central in the paper (or, each of them could become a separate paper per se):

- How concretely front end Web technologies, as mentioned at the beginning of the section 3.1, contribute to the transition process of scholarly communication;

- How to implement a quality assurance process of scholarly publishing, mentioned as a requirement (section 2.3. Impact metrics and reward systems), measuring impact and implementing a reward system, which is traditionally a major issue in the ongoing scholarly communication transition process.

- How to efficiently attach research data to papers is also a very interesting problem to tackle.

The authors should perhaps make a choice whether the goal is (1) to provide a general overview of the currently ongoing scholarly communication transition (something along the lines of the format published, for example, in [1]) that would more comprehensively embrace the scope of this complex subject; or (2) to select one (or a few) issues that the authors consider central to the transition process of scholarly communication and focus on these in much closer detail (e.g. exploiting front-end web technologies, measuring impact, reusing research data, ...); or (3) to focus on the description of a tool that helps advance the scholarly communication transition, which should be described in much closer detail, pinpointing the major contributions of this tool.

[1] Kriegeskorte, 2012 - An emerging consensus for open evaluation: 18 visions for the future of scientific publishing. In: Frontiers in Computational Neuroscience, 6:94.

Anonymous Reviewer replied on

The paper proposes a research agenda for linked research. The authors argue for an infrastructure in which all artifacts of research publications - CfPs, papers, proceedings, as well as micro-structure parts of publications (proofs, arguments, etc.) - are provided using the Linked Data paradigm. With the dokieli authoring application and an example for semantically enriched CEUR proceedings, the authors also present an example toolstack and implementation.

While the paper is quite extensive about the requirements for a Linked Research tool stack, in my opinion, the potential impact and advantages are a bit vague. For example, the authors state that each paper can be linked to the original call for papers, but it is not clear what benefit such a link brings. Adding a high-level vision about possible applications - e.g., improved scientometrics, semantic search for related works, etc. - would clearly improve the paper and justify the impact.

The authors claim that a strength of their approach is that tables and diagrams can be automatically updated, since they are linked to the underlying, possibly dynamic data (section 1), and that multiple copies of the same paper can be provided at different locations (sec. 2.1). On the other hand, versioning is only mentioned as an item for future work. Here, I would have appreciated a more thorough discussion of the possible impact of such design decisions. Being able to change a publication after its acceptance is a crucial paradigm shift in scientific publication and communication, and hence, a critical reflection of such a design decision should be added. A similar point holds for the idea of LOCKSS (3.3.), where versioning is crucial.

Along similar lines, 3.2.1 discusses the submission by URL instead of by submitting a paper. Such an approach would allow authors to change a paper during the reviewing process, which could totally flaw the entire reviewing process. Again, this is a paradigm shift w.r.t. current submission practices, which calls for a deeper discussion.

In section 3.3, it is stated that "the author is responsible for maintaining their service and domain name". This is used as a contrast to centralized services like arXiv. However, even personal pages are usually not technically maintained by the authors, but by their organization (university, company, etc.), or hosted by an internet service provider. Thus, the comparison is a bit unfair.

Section 5 is embarrassingly short. Furthermore, many aforementioned services (e.g., ResearchGate) are missing from the comparison.

In total, the paper misses a good motivation, the related works section is substandard, and the impact of ground-breaking design decisions should be discussed more thoroughly.

Minor remarks: - Fig. 1 should contain a cyclic arrow from research to research labeled "reference" - in 4.1, a figure (dokieli-edit-menu) is referenced which does not exist. Maybe the authors submitted a URL, and it has been added in the meantime? ;-)

Anonymous Reviewer replied on

This paper presents a framework for scholarly communication, and addresses a number of the processes involved. In general the opinion of the three reviewers is that the paper is well written and interesting. However, there are a number of serious comments:

- the scope of the paper is too broad

- not mature yet to be accepted as long paper

- it should present evidence

- discussion of related work/implementations is insufficient

For these reasons I advise accepting this paper as a poster