Where is Web Science? From 404 to 200

- Identifier

- https://csarven.ca/web-science-from-404-to-200

- In Reply To

- Call for Linked Research

- Workshop on Web Observatories, Social Machines and Decentralisation

- Notifications Inbox

- inbox/

- Annotation Service

- annotation/

- Published

- Modified

- License

- CC BY 4.0

Abstract

Typical practice in scholarly communication of Web Science diverges from the broader vision of the Web. This article outlines the socio-technical problem space of Web-based academic engagement, and presents a vision of a paradigm shift led by Web Science. We propose a path towards adding Web Science research to the Web. 🙈 🙉 🙊

Keywords

Introduction

The ideas in this article are intended to serve as areas of investigation for the Web Science community to self-examine and pursue a socio-technical (r)evolution in scholarly communication. Contemporary scholarly communication is a complex social machine involving a global network of (in the broadest senses) teachers, learners, buyers and sellers. Centuries or more of embedded assumptions and culture around knowledge acquisition and sharing meet modern technologies and practices. Advancements in scholarly communication are not keeping pace with the advances in, and expectations that society has of, communication in general. We argue that Web Scientists have both the duty and ability to lead progress, but are hampered by a complex array of social and technical challenges. This article unpacks some of these challenges, describes the advancements that have been made so far, and proposes small steps we can take to move forwards.

Literate culture is visual and detached... the effect of the electric revolution is to create once more an involvement that is total.

— Marshall McLuhan.

The Web offers extensible interactions, creative participation, and social engagement. There is a natural human desire to connect with others, as well as to co-create. Given these opportunities, the fundamental question to ask here is: why should research communication in Web Science be limited to, evaluated by, and rewarded according to old-fashioned practices? The methods in question which are still used date back to the invention of the mechanical movable type printing press (circa 1450).

When I saw the computer, I said, ‘at last we can escape from the prison of paper’... what did the other people do, they imitated paper, which to me seems totally insane

— Ted Nelson.

The Web can be purposed for greater possibilities than that of print alone; we urgently need to address the question: what constitutes a contribution in Web Science? What do you think of the following equivalence: If a tree falls in a forest and no one is around to hear it, does it make a sound?

≡ If Web Science scholarly communication is inaccessible or unidentifiable, does it exist?

Local-scale changes in Web architectures and resources can lead to large-scale societal and technical effects.

— The Semantic Web Revisited (Nigel Shadbolt, Wendy Hall, Tim Berners-Lee)

This article is a reflection on the scholarly communication practices primarily from Web Science, Semantic Web, Linked Data, and related Information Science communities, referred to collectively as Web Science.

Problem space

We first outline some of the categories of challenges that remain to be addressed to facilitate Web-based scholarly communication:

- Universal Access

- Research outputs tend to end up being published at for-profit third-party services. These services have created an ecosystem that is closed in nature, restricting the acquisition of research results only to the privileged, dependent on location or connection with subscribing institutions. Thus publicly funded research ends up actually controlled by

gatekeepers

, in many ways contradictory to the fundamental values of the Web. Even in the case of initiatives like Open Access, individuals and institutions end up with high costs in electronic subscriptions, self-archiving, and with article processing charges. This contradicts even the heart of the Budapest Open Access Initiative (or even if we revisit Subversive Proposal). The for-profit models which also influence rights and licensing on the information breed access barriers to human knowledge even today. Hence the emergence of guerilla services like Sci-Hub which bypass publisher paywalls. - Centralisation

- Academic publications end up in information silos. There are constraints and penalties for moving the data or making it accessible by other means. With that, the canonical identifiers of research objects — the strings that are considered to be academically

citable

— and the resources retrievable via those identifiers, are subject to the terms of the host. The opportunity to build a true sense of data ownership is missing from the Web Science community; information is usually handed over to third-party services operating in isolation in comparison to the Web at large. - Open communication

- The common scientific communication form in this space is unidirectional — between the organising committee and the authors — as well as held mostly behind the scenes. The communication is neither held in public nor exposed even after the articles are made publicly available. The public is not particularly aware of the grounds on which an article was accepted, the feedback and concerns it received from peer-reviewers, or the revisions it received. This is useful information for carrying out open discussions in the spirit of science. Lastly, there is no way to identify the reliability or strength of reviewers beyond the trust the community places on organising committees.

- User experience

- Scholarly articles and their peer-reviews are predominantly geared for desktop and print-based consumption. Knowledge is expected to fit rigorously within two-dimensional space, absorbed in a linear-fashion, and is devoid of the interactive possibilities of the Web.

- Machine-readable data

- Recently there are directives in some regions to publish datasets in accessible, machine-readable open formats, and Web Science research should be pioneering these efforts. Although more and more researchers (from all disciplines) are publishing their datasets and results directly in some form, Web Scientists are missing the opportunity to use their expertise to lead in publishing machine-readable semantic representations of all kinds of data, including the contents of prose-style research 'papers'. Such data can be purposed toward (semi)automated discovery of arguments and hypotheses, tracking provenance of and relationships between ideas, and collective understanding of the enormous scale of research which is produced every year.

- Practice

- The absence of Web-based engagement in one’s own research work may lead to confined understanding of what works or is feasible to materialise. In other words, the community at large neglects to eat its own dogfood. This has lead to exploring solutions to problems which do not necessarily work well in practice. The lack of involvement in the complete process of capturing research data from the start, from writing, to peer-reviews, and collation and distribution of articles, has lead to designing research communication that is strictly non-involving or participatory. The opportunity to better collaborate with the other researchers, as well as effectively reuse their data is minimal. The effects of non-engagement have consequences in personal education, control and trust, and the enhancement of the field as whole.

Where these themes converge is that notable amounts of scholarly data is either inaccessible to the Web at large or unfit for the native Web stack, and consequently hinders the evolutionary process of Web Science and its study. The rest of this article narrows down on some key areas where these issues emerge and proposes tangible paths towards working more closely within the forward-looking A Framework for Web Science.

Escaping the prison of paper

Eating our own dogfood

What I cannot create I do not understand. — Richard Feynman

There are several examples of well-intentioned researchers presenting their findings and ideas on how to take scholarly communication as well as Web Science forward, yet the majority stop at actually demonstrating what they propose. To take one recent example, the article The FAIR Guiding Principles for scientific data management and stewardship with 53 authors (that is not a typo), hosted by nature.com (2016), points out a helpful guideline: F1. (meta)data are assigned a globally unique and persistent identifier

. To eat one’s own dogfood, we would have expected an identifier for that statement, however it does not exist. Naturally, one should ask: what is Findable, Accessible, Interoperable, Reusable (FAIR) about that statement? What is the measurable delta between this article and every other article (and there are many) making the exact same recommendation? If we were to try to reuse the guideline that is put forward in our study, how can we link to it without any ambiguity? Here is a working example from 1996 https://www.w3.org/DesignIssues/Axioms.html#Universality2 written by Tim Berners-Lee which states: Axiom 0a: Universality 2. Any resource of significance should be given a URI.

Why was not this earlier work reused or cited?

In a similar vein, conferences announce their calls for contributions, and require contributions to use desktop/paper-based electronic formats, e.g., ELPUB, ACM Hypertext, WWW, WebSci, ICWE. All of these calls are about sharing research output about the Web as two dimensional objects destined for print. None of them explore the full potential of native Web tools or technologies for knowledge dissemination, or encourage their research communities to do so. Reviews are held behind closed doors, reviewers are not publicly attributed or subject to question. These type of events even state that contributions which do not comply with certain constraints will be rejected at the door without review. To pick further on WWW (the World Wide Web conference), its contribution to the Festival of the Web, expects contributions to be in English

, font size no smaller than 9pt

, in PDF

, formatted for US Letter size

, occupy no more than eight pages

. McLuhan, Ted, TimBL, sorry, we really messed up our information superhighway. If we apply Tim’s Linked Open Data star scheme, it is debatable whether they would even receive a single ★ (star).

Lastly, the peer-reviews themselves tend to live behind centralised services like Easychair with no particular educational value to anyone outside of the restricted access, i.e., the public. A transparent peer-review process as well as the discussions around it can be seen as an indicator of peer-review quality.

All of these are lost opportunities for the community as a whole, where even small amounts of mindfulness and care on building towards a Web We Want can make a big difference. To counter our complaints, we now present the following as some positive examples of eating one’s own dogfood.

Sparqlines, SemStats, CEUR-WS

SemStats is a workshop series usually held at ISWC. Determined to push forward the vision of accessible and open semantics research, we have been welcoming HTML/RDF based contributions since 2013. The entire workshop site, including the calls themselves are in HTML+RDFa. This enables the possibility to make relations such as article is in reply to a call, as well as having the proceedings semantically related to both the article and the workshop. We can see these simple yet useful links among these documents with their accompanying data:

@prefix sioc: <http://rdfs.org/sioc/ns#> .@prefix schema: <https://schema.org/> .@prefix bibo: <http://purl.org/ontology/bibo/> .<http://semstats.org/2013/>as:inReplyTo <http://iswc2013.semanticweb.org/content/call-workshops.html> ;schema:hasPart <http://semstats.org/2013/call-for-papers> .<https://csarven.ca/linked-statistical-data-analysis>as:inReplyTo <http://semstats.org/2013/call-for-papers> ;bibo:citedBy <http://ceur-ws.org/Vol-1549/> .<http://ceur-ws.org/Vol-1549/>schema:hasPart <http://ceur-ws.org/Vol-1549/#article-06> .<http://ceur-ws.org/Vol-1549/#article-06>bibo:uri <https://csarven.ca/linked-statistical-data-analysis> .

Semantic Web journal

The online Semantic Web journal places a mandate for the authors to provide their peer-reviewed articles in LaTeX format in order to be fit for the third-party publisher’s workflow, as well as to disseminate the research knowledge about Semantic Web in PDF. However this is in addition to the article being available (free of charge) on their website and in some cases linked to their canonical URL. The highlight of the journal is its encouragement for open and transparent peer-review. Reviews are publicly accessible on the contributions at each phase of the publication process. Additionally, authors of the reviews have the option to be transparent about their identity, which plays an important role towards accountability as well as receiving well-deserved attribution for their time and expertise.

Semantic Web Dog Food and ScholarlyData

The Semantic Web Dog Food initiative was aimed at providing information on papers that were presented, people who attended, and other things that have to do with the main conferences and workshops in the area of Semantic Web research.

The initiative was successful and grounded itself in demonstrating convincing arguments towards contributing to the Semantic Web vision by providing a recipe for dogfooding. However, one limitation of its corpus on research articles was that it only consisted of metadata like article title, authors, abstract. There was no granular semantic information like hypothesis, arguments, results, methodology, and so on since the article source format was not accompanied with semantic relations.

Following this effort, the ScholarlyData project recently became the successor of Semantic Web Dog Food project, where it focused on refactoring the original dataset, as well as making improvements on the Semantic Web Conference Ontology. Nevertheless, the input data (from the articles, conferences, people and organisations) is still no better than its source formats. The dependency here is not on native Web formats but on others’ requirements and convenience. These initiatives can still have higher potential if the original data shifted from metadata to data.

Current initiatives

Some of the academic conferences in Web Science, e.g., ISWC, ESWC, EKAW, as well as multiple conferences and journals from non Computer Science disciplines, have shown interest in experimenting with the ideas on using non-print centric formats for the research contributions. While the observable progress is relatively slow, it is on the community’s radar. This is a social progress in contrast to the state of affairs circa 2012.

Most recently, venues at WWW2017: Linked Data on the Web pitched the initiative towards Pioneering the Linked Open Research Cloud

; and the Workshop on Web Observatories, Social Machines and Decentralisation takes a more holistic approach that is closely aligned with Web Science. The workshop on Enabling Decentralised Scholarly Communication at ESWC2017 is part of an ongoing Call for Linked Research. Contributors to these calls are explicitly requested to make the best use of what the Web offers (incorporating self-publication, archiving, interactions, social engagement), as well as to raise questions to further understand the challenges ahead and ways to pursue them. This ought to be the norm in Web Science scholarly communication regardless of the content of the research itself.

In summary, Web Scientists ought to pay it forward by experimenting with the technologies in their playground when they have the chance. In this day and age, a Web scientist who refuses, neglects, or is incapable of using native Web technologies and standards to capture and disseminate their own knowledge is a bit like a natural scientist insisting to use their own eyes when a microscope is made available to them. We should make best use of the media that are available to us.

Illusion of existence

Let’s have a look at what it means to exist in terms of scholarly communication.

While DOIs and ORCIDs are useful tools, they are centralised manifestations in context of the Web. On the technical end, information that is identified with a DOI scheme does not in and of itself equate to good quality. After all, anyone can have a DOI registry under their own domain. However in social terms, especially in scientific circles, they are perceived to have more weight than an HTTP URI. HTTP URIs and DOI strings are the same in being unique strings which can be recognised by certain systems. doi.org makes these identifiers resolveable via HTTP, but is extremely brittle, relies on redirection, and suffers from reference rot. The cultural value of DOIs can be observed in the tools and services which are built around only acknowledging or exchanging data which has a DOI. Services like CrossRef or DataCite for instance acknowledge https://doi.org/10.1007/978-3-319-25639-9_5 but do not acknowledge the canonical URI https://csarven.ca/this-paper-is-a-demo, which is accessible to anyone for free, accompanied with granular Linked Data, interactions, peer-reviews, and wider social engagement tooling. Regardless, the former URI is considered to be citable

and the latter is just a blog post according to most Web scientists (for those who do not feel like clicking the links, the article contents are the same). Even services like Open Citations (dependent on the other centralised services) only consider resources which essentially have have a DOI pattern.

Similarly, while I can more authoritatively provide rich semantic information about my own identity on the Web through https://csarven.ca/#i (and enabling a follow-your-nose type of exploration) than I ever could with http://orcid.org/0000-0002-0880-9125, the latter gets acknowledged by services which are essentially disjoint from the rest of the Web. Again, current centralised systems would acknowledge the ORCID URI but not what I have to say about myself that is under my control. I own/rent the domain csarven.ca, but have no control over those of orcid.org. Moreover, to ‘park’ a profile at the latter, I must agree to their terms of service. This is not particularly different than having a profile at Facebook, Twitter, or Google for instance. Heir Today, Gone Tomorrow.

There is the traditional approach where an article goes through an opaque peer-review process; complies with the technical requirements of what constitutes a paper

; and has the individual or their organisation paying the fees (to publish as well as to attend potential meetings), in addition to agreeing to the terms of the publisher. In this case, works are entitled to exist by means of centralised identifiers. If however this process is not met, the community does not necessarily consider what lays outside to be scientific knowledge. It goes without saying that publishing scientific knowledge on the Web, i.e., accessibly, and with different modes of engagement, is orthogonal to having a free and open, attributable scientific publication process. The requirements of publishers need not necessarily prevent us as individuals from publishing our work on our own websites. However, researchers are not occupying a vacuum, and contend with influences from many directions. These intertwined concerns make up the scholarly communication social machine, one part of which is that universities and libraries channel public funds for subscription fees so that researchers have read access to the articles, reinforcing the system. DOI and ORCID serve as top-down authoritarian constructs. Consequently, the type of data that can be fetched through these routes is fundamentally dependent on whatever the third-party publisher deems suitable for their own business and technical process.

Directing research focus

The particular areas of research focus in Web Science is generally guided by conference organisation and journal committees, with the influence of senior researchers and their team’s interests, as well as the funding that is tied to that research. While this approach is practical and accommodates the community’s short-term needs and activities, it is evidently based on human-centred decision making as opposed to leveraging data-driven analysis of the field.

To further examine this, we observe that the topics of interest for calls for contributions is a reflection on what worked and did not from earlier experiences usually through town-hall

discussions at conferences, as well as off-the-record discussions through different channels. This is valuable feedback from the community, however it could and should be accompanied by the possibility that new research challenges can be discovered by analysing directly data about what has already been studied. This would be a measurable observation which can feed back into the community, as well as providing actual data when it comes to requesting funding more legitimately.

Freedom to innovate

Web Science can serve itself better if the freedom to innovate around how to best present and distribute research results is continuously encouraged. In order for the community to evolve using its own framework, the attempts at reformulating its conventions should be carefully considered and all centrally or top-down imposed restrictions should be thoroughly critiqued.

If articles and reviews accommodate machine-readable data (like Linked Data), the potential for reuse is increased. For example, if research articles capture their problem statements, motivation hypothesis, arguments, workflow steps, methodology, design, results, evaluation, conclusions, future challenges, as well as all inline semantic citations (to name a few) where they are uniquely identified, related to other data, and discoverable, then specialised software can be used to verify the article’s well-formedness with respect to the domain. In essence, this materialises the possibility of articles being executable towards reproduction of computational results. Similarly, user interfaces can manifest environments where readers can rerun experiments or observe the effects of changing the input parameters of an algorithm. This provides an important affordance for a more involving environment for the user, improves learnability of material, and supersedes the passive mode of simply reading about a proposed approach.

Innovation can also be encouraged by shortening the length of the research cycle. There are benefits to releasing early-work to the scientific community. For instance, having an early timestamp on some research can provide a strong signal on the origins of ideas and solutions, as well as having the value of practicality of receiving initial feedback from the community and public. Today, even some of the Open Access journal models slow down the scientific process (even if released to the public within first 2 years) when research is not immediately accessible.

Illusion of quality

We can examine social behaviours around writing articles for particular conferences and journals. For the sake of this discussion, we can refrain from judging the quality of the peer-reviews themselves since rich and poor reviews appear everywhere. This is especially true since detecting bias even in accurate research findings can be challenging.

One groupthink is a fixation on equations like: the number of accepted peer-reviewed articles over total contributions to a conference as a signifier for the venue’s importance in terms of academic credit. Lower the percentile tends to socially imply that a given venue is challenging for scholars to leave their scholarly mark. Unfortunately, such social perceptions create artificial bubbles.

To better understand these acceptance rates, we must think again in terms of the holistic social machine of scholarly communication. First, the number of acceptances are not solely based on the value of the contents of contributions as useful research output, but rather dependent on a number of other factors. The contributions that are accepted are ultimately subject to 1) the availability and suitability of the research tracks in a given year (decided, as we already mentioned, by the instinct of senior academics or whims of funding bodies), and 2) the number of articles allotted per volume or proceeding that the chairs are able to take on. Conferences that are collaborating with third-party publishers are instructed to commit to an optimal or preferred number of pages (or other criteria like number of words) for the conference series. These requirements are driven, again as we have already mentioned, by the business needs of for-profit organisations.

If venues were not subject to the constraints mentioned above, what would the information space be for the scientific output? Such endeavour would bring researchers to aim for venues or tracks that are most appropriate for their research area, and keep their focus solely on sound and reproducible research, as opposed to making it in to a journal that is artificially prestigious and imposed on researchers by third-party services. Perhaps we should find other standards by which to judge the quality of our research output aggregators instead. Criteria could include: are we permitted to present our work in the way that best conveys its message? Is my contribution deftly and conveniently situated alongside related work for passers-by? Do I have enough control to issue updates, or to engage with reviewers in context?

Attributions and credit

The Web Science community, like in other fields of research, goes above and beyond investing time, energy, and expertise into peer-reviews. With a few exceptions, reviewers are usually not publicly attributed and credited for their contributions. While the quality of peer-reviews may always be subject to question, they are nevertheless critical to providing some form of verification of scientific output. Hence, they should be captured, preserved, and environments should be created for further social engagement. Peer-review should not be a one-off event, but an ongoing conversation or collaboration. This desire amongst researchers is demonstrated by emerging services which allow academics to engage in pre and post publication peer-reviews and comments publicly to foster verifiable reviews and to keep track of retractions.

While most research publications in Web Science have their focus on positive results, as well as potentially reusable output (applications), the volume tends to pale in comparison to the negative or inconclusive results obtained during the research process. It is hypothesised that to get more out of science, negative results should as well be made visible and credited as they also serve useful information for other researchers to rapidly advance the field. Hence, workshops like Negative or Inconclusive Results in Semantic Web should be integral to the main research tracks or other workshops.

Be the change: towards Linked Research

Linked Research is an initiative, a movement, and a manifesto. It is set out to socially and technically enable researchers to take full control, ownership, and responsibility of their knowledge, and have their contributions accessible to society at maximum capacity. Linked Research calls for dismantling the archaic and artificial barriers that get in the way of this. A call

is available for publishing and promoting open, accessible and reusable academic knowledge.

An acid test is proposed at Linked Research: An Approach for Scholarly Communication, which aims to evaluate systems openness, accessibility, decentralisation, interoperability in scholarly communication. This test does not mandate a specific technology, therefore the challenge can be met by different solutions. There are a set of proposed challenges for the community to check their systems against to see how well they can fulfill them, as part of the push towards building cooperating systems.

Framing our vocabulary

If we acknowledge an imminent new paradigm shift on the horizon, it is necessary to commit and exercise a new vocabulary in our academic endeavours. We should give importance to these new terms because they set our frame of mind, which sets the stage for our daily work. I would like to encourage new calls for contributions to welcome researchers to share their articles and supporting material on the Web:

- The archaic mental frame hinged on the idea that individuals submit their work, as in handing it over, dislocating themselves from what they have produced by giving up their future rights. There is no need to give up anything. It belongs to the creator, and they simply share it under their terms.

- s/submission/contribution

- A call by a venue does not merely get submissions of works. That is a misnomer. The process is much richer than that. Individuals make contributions to the field, pushing the boundaries of the collective understanding.

- s/paper/article

- The term paper is an obvious and dreadful artefact imposed by the pre-digital generation and archaic business models. Academics in Web Science tend to create articles (electronic documents if you will) that gets to reside somewhere on the Web, that can be discoverable and accessible.

Cooperation

Sooner or later we need a strategy to address questions like: What do we aim to accomplish? Who is trying to do it and for the benefit of whom? How do we know whether we are making progress and when we have succeeded?

Potential solutions to these questions and new challenges need to address the unspoken gap between researchers, academic and public institutions, as well as what ends up in the commons. It is not just about researchers publishing their work online, nor about conferences requesting semantically annotated contributions or operating with transparent peer-review. All of these things must move in combination. Here are some ideas that can be executed today:

- Researchers

- Researchers take full responsibility for the creation and publication of their work. This can be at any Webspace they put trust on or have sufficient authority over, e.g., personal site, institutions’ student and faculty pages. Making their work as Webby as possible e.g., linking their arguments and citations to others', interactive story-telling or experimentation possibilities for their readers. Researchers should take special care to ensure URI persistence and archiving of their work. See what your library or online archives (such as archive.org and archive.is) can do for you.

- Conferences and journals

- In person conferences and online journals should encourage and accept research publications which use native Web technologies. Support transparent and attributed peer-review process. Aggregation or proceedings of the events should be machine-friendly and archival measures should be taken in addition to that of researchers.

- Public institutions

- Given sufficient evidence of the Web Science community’s acknowledgement of research as well as its contributions (eg peer-reviews), academic institutions should officially acknowledge such work towards academic credit. Libraries should make the community’s output discoverable, catalogued, enriched, among many other things.

Conclusions

This article highlighted some of the significant areas in the practice of Web Science which should be the subject of scrutiny. We describe how scholarly communication functions as a complex social machine, with many aspects which influence each other. This conceptualisation of the space can help us to coordinate efforts in effecting change. Only by examining our current practices, as well as cherishing our curiosity we can evolve Web Science. One of the important outcomes of this is being more active participants for the Web We Want

.

The new generation of researchers will phase out older practices. The recent or current generation of academics are sticking to the status quo and adhering to demands of the third-party publishers’ requirements, or simply because they were brought up in their careers to strictly comply with desktop/print based knowledge dissemination. This generation, resistant to evolution, swims in a cool medium - the Web - without actually embracing it. New students and researchers entering an institution are asked to learn Word or LaTeX in order to document their work. We can ask them to learn HTML (possibly with RDF as well) instead. The new generation

of academics will open up a new phase for the Web Science community and set a revised agenda to pioneer the field and its practices. This is not about publishing and consuming research knowledge in Webby methods (that is really only a part of the big picture), but diving right into the essence of understanding and welcoming decentralisation, interoperability, data responsibility, accessibility, user experience, and a true sense of linking and building on ideas that can be tracked and discovered. Perhaps most importantly, helping us to better understand the effects of what we do, and the how the Web is changing societies.

Due to the divergence from the practices described by the Web Science framework, we need to re-examine our mode of work. The community should be preparing for a paradigm shift, checking the fundamental shared assumptions about the current paradigm in use. What will shortly follow is a state of crisis as the faith in conducting archaic practices will be shaken. The community will then reach a pre-paradigm phase where the new perspective (let’s call it Linked Research for now?) will be further explored. The revolution will become invisible when scientists return to their daily activities, better using and incorporating the Web and what it has to offer.

We need to be prepared to answer questions like Which scholarly articles are relevant to my research problem?

, How can we find and compare the variables of similar hypothesis?

, Which other researchers might find my results/output directly useful so I can notify them?

, What are some of the unanswered questions or new challenges in a research area?

, What are the research gaps?

, How do we verify a peer-review and credit the peer-reviewer?

, How do we control, store, preserve research?

, When do I get my free lunch?

If trying to achieve space travel was based on our ability to jump, we would have reinvented the trampoline. Therefore, in the spirit of the Web, we ought to strive to change the status of Web Science from 404 Not Found to 200 OK!

Acknowledgements

Interactions

2 interactions

Sarven Capadisli replied on

Sarven Capadisli replied on

This image will be updated from time to time to include the changes, e.g., social interactions, on this resource.

Aastha Madaan replied on

The paper presents an interesting research problem of decentralized scholarly communication. It takes the Semantic Web approach towards it and emphasizes on the role of Web Science. The paper presents on overview of the research challenges, the need for such an architecture or protocol for publishing. While the arguments for Why are strong they become weak for how this will be done. While the paper describes the support from a Semantic Web perspective for addressing the granularity and vocabulary required to structure a research paper. A large number of problems are either overlooked or superficially discussed. The accountability of reviewers is discussed but issues with publications such as plagiarism, impact of research is not discussed. The dissemination and adoptability of such a protocol is a huge challenge which is not much discussed in the paper.

Some issues which are important to be addressed are as below

A transparent peer-review process as well as the discussions around it can be seen as an indicator of peer-review quality.

How will the arguments and discussion be moderated, remain objective and who will decide the stopping point.- It will be interesting to get authors point of view on the influence of different administrative jurisdictions of web archives and how will that play a role in distributed archives of decentralization

- Also, I would be happy if the authors could explain how will it be ensured that if the version is accepted it wouldn’t be changed edited and removed from the Web

- For transparent reviews – what will be the incentive mechanism will it be some scholarly currency and then how is it regulated or standardized in the system.

- How will such papers be distinguished from blog posts which a number of researchers in any area write from a rigorous technical study (academic paper).

- The research paper seems to mention all key terms in Web science, semantic web, social machines, ontologies, rdfs etc.

- Minor comments:

with 53 authors (that is not a typo)

,eating one’s own dogfood

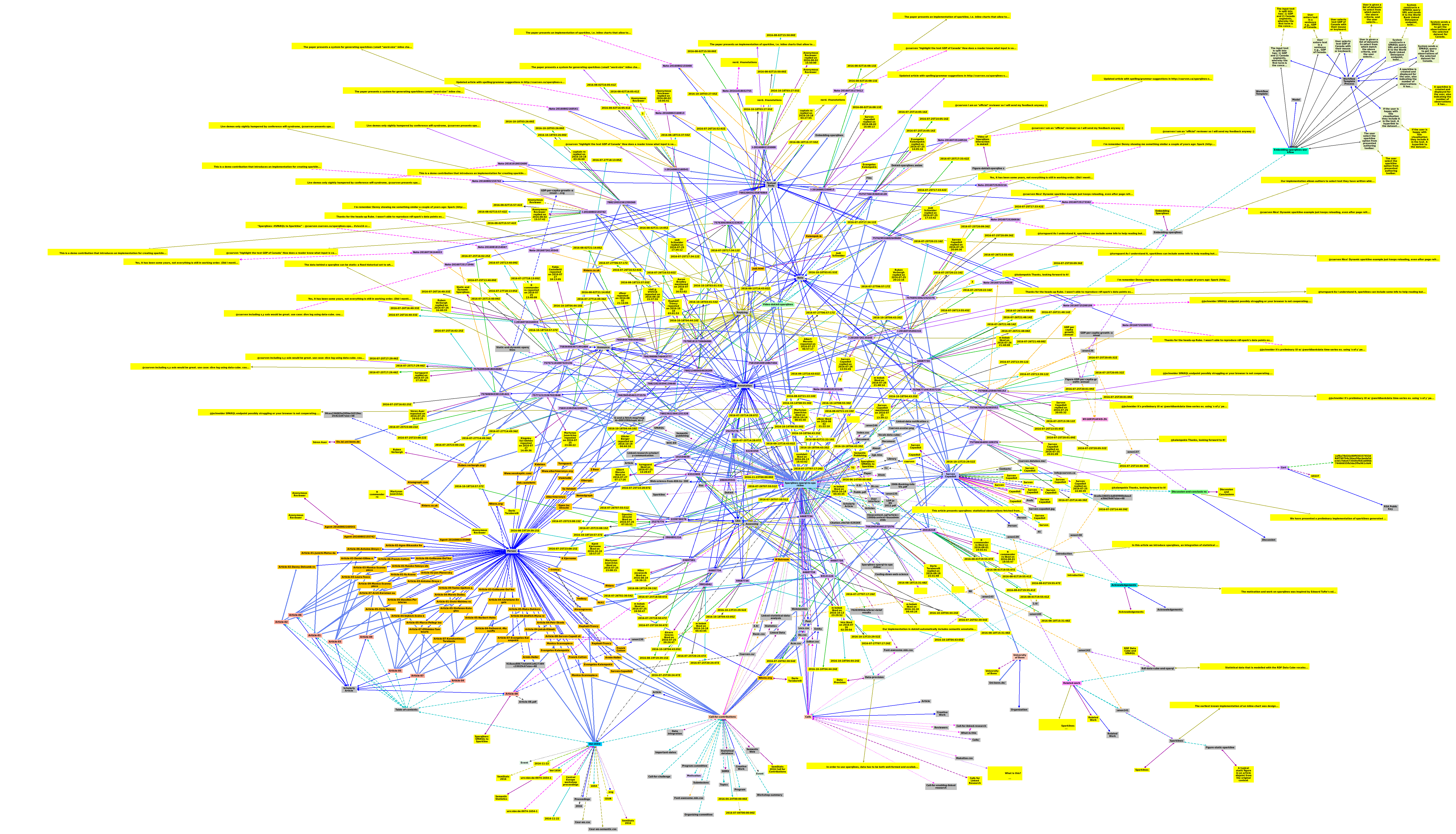

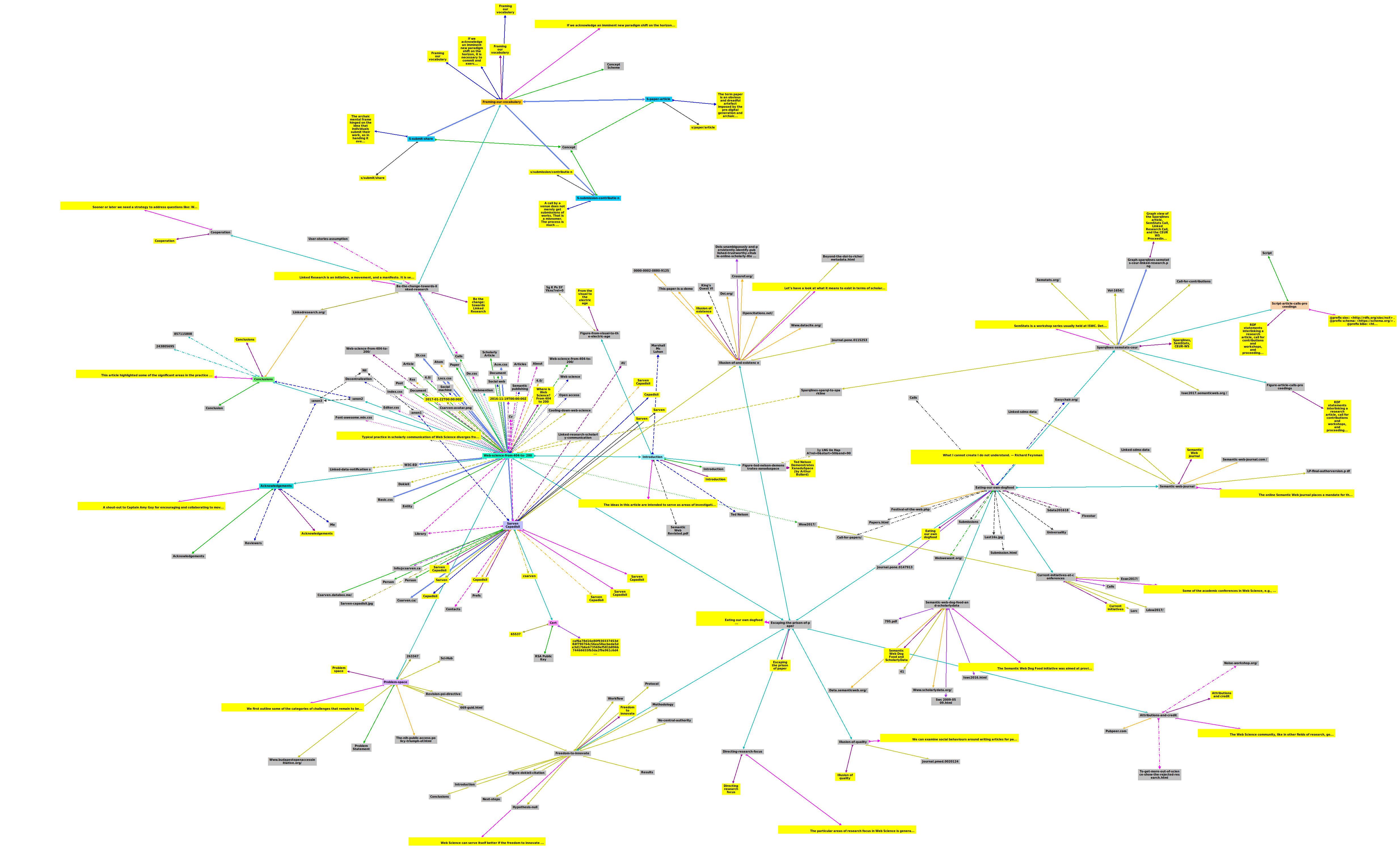

these casual slangs could be avoided and written in a more formal way- Graph view of the Sparqlines article, SemStats Call, Linked Research Call, and the CEUR-WS Proceeding generated using is difficult to read and no explanation given.

- No sections have been marked, no page length can be understood, any basis for skipping this or mapping this to the idea proposed in the paper.

- A bit of coherency in content would greatly improve the readability and comprehensibility of the concepts mentioned in the paper.